Artificial intelligence (AI), the technology behind ChatGPT, is increasingly used in medicine to improve disease diagnosis and treatment and to reduce unnecessary screening for patients. However, AI medical devices could harm patients and worsen health inequities if they are not designed, tested, and used with care, according to an international task force that included a bioethicist from the University of Rochester Medical Center.

Jonathan Herington, Ph.D., was a member of the AI Task Force of the Society of Nuclear Medicine and Molecular Imaging, which published two papers in the Journal of Nuclear Medicine laying out recommendations for developing and deploying AI medical devices ethically. In short, the task force called for greater transparency about AI’s accuracy and limitations, and outlined ways to ensure that all people, regardless of race, ethnicity, gender, or wealth, have access to AI medical devices that work for them.

While AI developers bear the burden of proper design and testing, health care providers are ultimately responsible for using AI properly and should not rely too heavily on AI predictions when making patient care decisions.

“There should always be a human in the loop,” said Herington, who is an assistant professor of Health Humanities and Bioethics at URMC and was one of three bioethicists added to the task force in 2021. “Clinicians should use AI as an input into their own decision making, rather than replacing their decision making.”

This requires clinicians to understand how a given AI medical device is intended to be used, how well it performs at that task, and what its limitations are, and to pass that knowledge on to their patients. Doctors must weigh the relative risks of false positives and false negatives in a given situation, while taking underlying disparities into account.

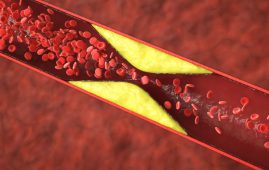

When using an AI system to identify probable tumors in PET scans, for example, health care providers must know how well the system identifies that specific type of tumor in patients of the same sex, race, ethnicity, and so on as the patient in question.
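One way to picture the kind of subgroup reporting described here is a per-group breakdown of sensitivity (how often real tumors are flagged) and specificity (how often healthy scans are correctly cleared). The Python sketch below is only an illustration: the function name `subgroup_performance`, the group labels, and the eight example records are hypothetical and do not come from the task force papers.

```python
from collections import defaultdict


def subgroup_performance(records):
    """Compute sensitivity and specificity separately for each patient group.

    `records` is an iterable of (group, has_tumor, flagged) tuples, where
    `has_tumor` is the confirmed diagnosis and `flagged` is the AI output.
    A metric is None when a group has no cases of the relevant kind.
    """
    counts = defaultdict(lambda: {"tp": 0, "fn": 0, "tn": 0, "fp": 0})
    for group, has_tumor, flagged in records:
        if has_tumor:
            key = "tp" if flagged else "fn"
        else:
            key = "fp" if flagged else "tn"
        counts[group][key] += 1

    results = {}
    for group, c in counts.items():
        positives = c["tp"] + c["fn"]
        negatives = c["tn"] + c["fp"]
        results[group] = {
            "sensitivity": c["tp"] / positives if positives else None,
            "specificity": c["tn"] / negatives if negatives else None,
        }
    return results


# Made-up records for illustration only (hypothetical groups and outcomes).
example = [
    ("group_a", True, True), ("group_a", True, True),
    ("group_a", False, False), ("group_a", False, True),
    ("group_b", True, True), ("group_b", True, False),
    ("group_b", False, False), ("group_b", False, False),
]
report = subgroup_performance(example)
for group, metrics in sorted(report.items()):
    print(group, metrics)
```

In this toy data the system catches every tumor in one group but only half in the other, a gap that a single overall accuracy figure would hide.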

“What that means for the developers of these systems is that they need to be very transparent,” said Herington.

According to the task force, it is up to AI developers to give users accurate information about their medical device’s intended use, clinical performance, and limitations. One approach the task force recommends is to build alerts into the device or system that tell users how uncertain the AI’s predictions are. These could look like heat maps on cancer scans that show which areas are more or less likely to be cancerous.

To limit that uncertainty, developers must carefully define the data they use to train and test their AI models, and should use clinically relevant criteria to evaluate a model’s performance. It is not enough to simply validate the algorithms used by a device or system. AI medical devices should be tested in so-called “silent trials,” in which researchers evaluate their performance on real patients in real time, but their predictions are not shared with the health care provider or used in clinical decision-making.
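The logic of a silent trial can be sketched in a few lines of Python: the model’s predictions are stored for researchers, never returned to the care team, and only later compared with confirmed outcomes. Everything in this sketch is an assumption made for illustration, including the `SilentTrial` class, its method names, and the case identifiers; it is not a description of how any real trial or product is built.

```python
from dataclasses import dataclass, field


@dataclass
class SilentTrial:
    """Hypothetical log that keeps AI predictions hidden from clinicians."""

    _predictions: dict = field(default_factory=dict)
    _outcomes: dict = field(default_factory=dict)

    def record_prediction(self, case_id, predicted_positive):
        # Store the prediction for later review. Nothing is returned,
        # so the result never reaches the treating clinician.
        self._predictions[case_id] = bool(predicted_positive)

    def record_outcome(self, case_id, confirmed_positive):
        # Store the diagnosis reached through standard care.
        self._outcomes[case_id] = bool(confirmed_positive)

    def summary(self):
        # Score only the cases that already have a confirmed outcome.
        scored = [c for c in self._predictions if c in self._outcomes]
        agree = sum(self._predictions[c] == self._outcomes[c] for c in scored)
        return {
            "scored_cases": len(scored),
            "pending_cases": len(self._predictions) - len(scored),
            "agreement": agree / len(scored) if scored else None,
        }


# Illustrative use with made-up case identifiers.
trial = SilentTrial()
trial.record_prediction("case-1", True)
trial.record_prediction("case-2", False)
trial.record_prediction("case-3", True)
trial.record_outcome("case-1", True)
trial.record_outcome("case-2", True)
result = trial.summary()
print(result)
```

A real evaluation would report clinically relevant measures rather than raw agreement, but the separation between recording a prediction and acting on it is the point of the design.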

Developers should also make sure their AI models are useful and accurate in every setting in which they will be deployed.

“A concern is that these high-tech, expensive systems would be deployed in really high-resource hospitals, and improve outcomes for relatively well-advantaged patients, while patients in under-resourced or rural hospitals wouldn’t have access to them—or would have access to systems that make their care worse because they weren’t designed for them,” said Herington.

Currently, AI medical devices are being trained on datasets in which Latino and Black patients are underrepresented, which means the devices are less likely to make accurate predictions for patients from these groups. To avoid deepening health inequities, developers must ensure that their AI models are calibrated for all racial and gender groups by training them on datasets that represent all of the populations the medical device or system will ultimately serve.

Though these recommendations were developed with nuclear medicine and medical imaging in mind, Herington believes they can and should be applied to AI medical devices more broadly.

“The systems are becoming ever more powerful all the time and the landscape is shifting really quickly,” said Herington. “We have a rapidly closing window to solidify our ethical and regulatory framework around these things.”

For more information: Ethical Considerations for Artificial Intelligence in Medical Imaging: Data Collection, Development, and Evaluation, Journal of Nuclear Medicine

https://doi.org/10.2967/jnumed.123.266080
